I am a second-year master's student at the School of EIC, Huazhong University of Science and Technology, advised by Prof. Wenyu Liu and Prof. Xinggang Wang. Before this, I received a B.E. degree in Computer Science from the School of EIC, Huazhong University of Science and Technology.

My research interests lie in efficient neural rendering, including 3D generation, neural fields for dynamic and static scenes, and human body rendering. (* indicates equal contribution.)

Taoran Yi, Jiemin Fang, Zanwei Zhou, Junjie Wang, Guanjun Wu, Lingxi Xie, Xiaopeng Zhang, Wenyu Liu, Xinggang Wang, Qi Tian arXiv, 2024 [Paper] [Page] [Code] Aiming to greatly enhance generation quality, we propose a novel framework named GaussianDreamerPro. The main idea is to bind Gaussians to reasonable geometry that evolves throughout the generation process. Across the stages of the framework, both geometry and appearance are progressively enriched. The final asset consists of 3D Gaussians bound to a mesh and shows significantly enhanced detail and quality compared with previous methods. Notably, the generated asset can be seamlessly integrated into downstream manipulation pipelines such as animation, composition, and simulation, greatly broadening its potential applications.

Guanjun Wu*, Taoran Yi*, Jiemin Fang, Lingxi Xie, Xiaopeng Zhang, Wei Wei, Wenyu Liu, Qi Tian, Xinggang Wang CVPR, 2024 [Paper] [Page] [Code] We introduce 4D Gaussian Splatting (4D-GS) to achieve real-time dynamic scene rendering while also offering high training and storage efficiency. 4D-GS renders in real time at high resolutions, reaching 70 FPS at 800×800 resolution on an RTX 3090 GPU, while maintaining quality comparable to or higher than previous state-of-the-art methods.

Taoran Yi, Jiemin Fang, Junjie Wang, Guanjun Wu, Lingxi Xie, Xiaopeng Zhang, Wenyu Liu, Qi Tian, Xinggang Wang CVPR, 2024 [Paper] [Page] [Code] We propose GaussianDreamer, a fast 3D generation framework in which a 3D diffusion model provides point-cloud priors for initialization and a 2D diffusion model enriches the geometry and appearance. GaussianDreamer can generate a high-quality 3D instance within 25 minutes on a single GPU, much faster than previous methods, and the generated instances can be rendered directly in real time.

Guanjun Wu*, Taoran Yi*, Jiemin Fang, Wenyu Liu, Xinggang Wang 3DV, 2024 [Paper] [Page] [Code] [Data] We propose a dynamic HDR NeRF framework, named HDR-HexPlane, which learns 3D scenes from dynamic 2D images captured with various exposures. With the proposed model, high-quality novel-view images can be rendered at any time point with any desired exposure. We further construct a dataset of multiple dynamic scenes captured with diverse exposures for evaluation.

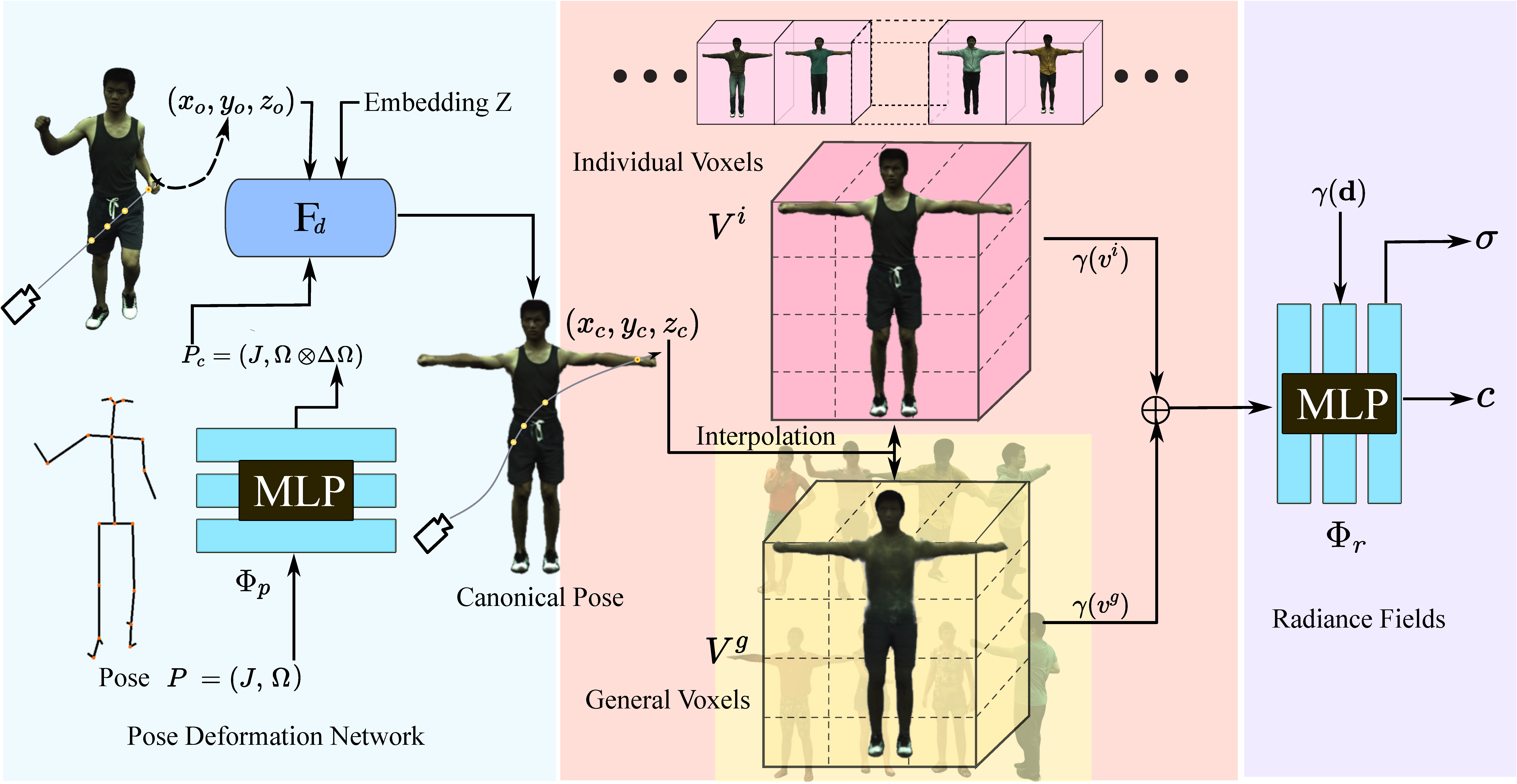

Taoran Yi*, Jiemin Fang*, Xinggang Wang, Wenyu Liu arXiv, 2023 [Paper] [Page] [Code] In this paper, we propose GNeuVox, a rendering framework that learns moving human body structures extremely quickly from a monocular video. Our method achieves significantly higher training efficiency than previous methods while maintaining similar rendering quality.

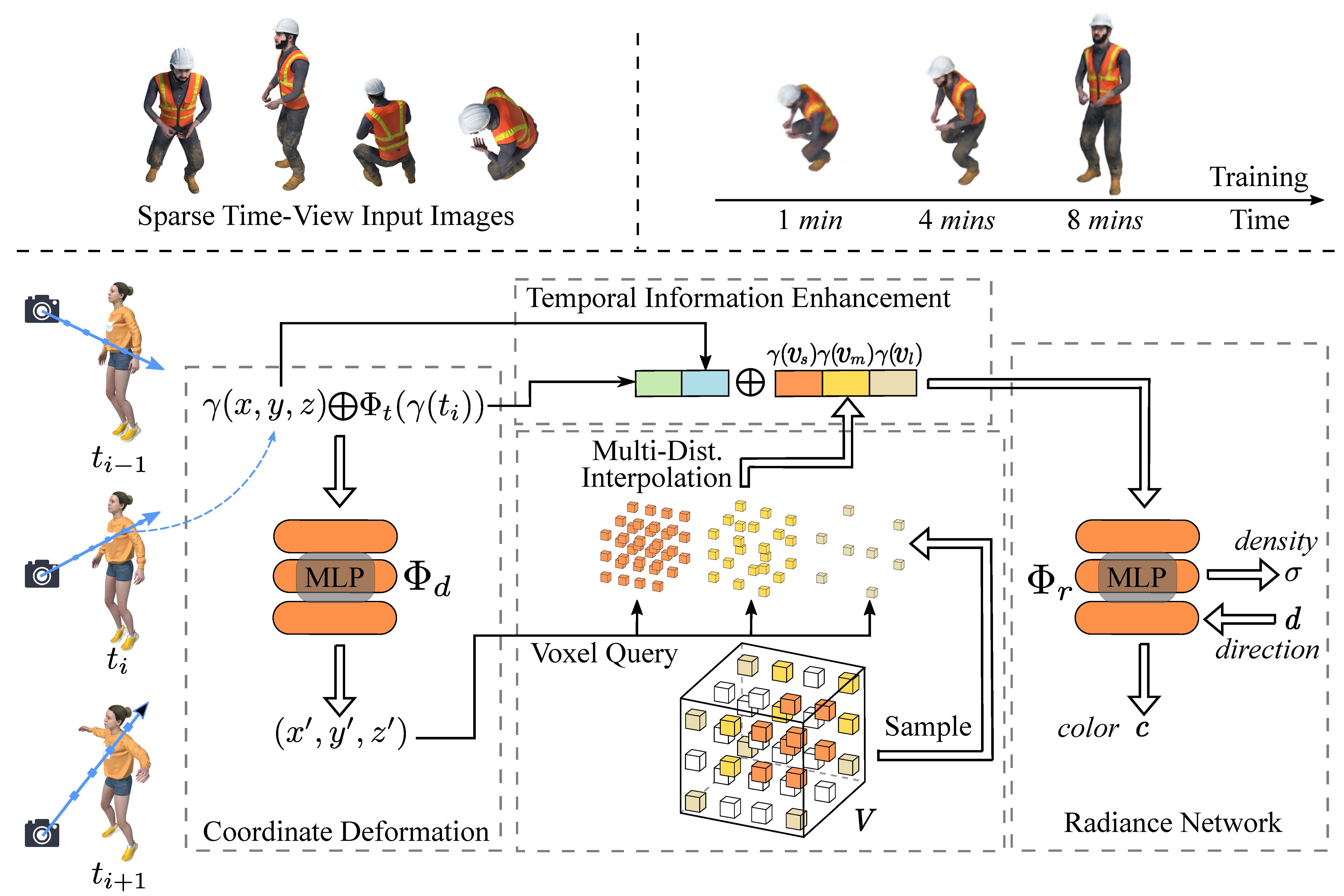

Jiemin Fang*, Taoran Yi*, Xinggang Wang, Lingxi Xie, Xiaopeng Zhang, Wenyu Liu, Matthias Nießner, Qi Tian SIGGRAPH Asia Conference Papers, 2022 [Paper] [Page] [Code] TiNeuVox completes training in only 8 minutes with an 8 MB storage cost, while achieving similar or even better rendering performance than previous dynamic NeRF methods.

Template adapted from here.